Migration Path

Many organizations are looking into extending cloud into edge locations. I am fortunate enough to have supported hardware at the edge and was asked to upgrade a few of our Windows Server 2019 S2D clusters to Azure Stack HCI. While a documented process is available, at the time of writing it failed to import the storage pool and disks. I attempted this on both 21H2 and 22H2 with the same result: the cluster was created, but the virtual disks were left in a Detached operational status.

The current Microsoft process can be found at https://learn.microsoft.com/en-us/azure-stack/hci/deploy/migrate-cluster-same-hardware

After many hours of trial and error, the following outlines the process I used to successfully upgrade multiple clusters to Azure Stack HCI.

Unfortunately, the operating system change from Windows Server 2016/2019/2022 cannot be done via an in-place upgrade as you might normally do. That is not a supported path.

The operating system must be installed fresh and then reconfigured.

Why? That is a great question...

Prerequisites

The existing hardware you plan to use must be in the Azure Stack HCI catalog. You can confirm this at https://azurestackhcisolutions.azure.microsoft.com/#/catalog

Download the Azure Stack HCI ISO from https://azure.microsoft.com/en-us/contact/azure-stack-hci/

Installing the Azure Stack HCI operating system can be done via your IPMI/BMC interface by mounting the installer ISO and booting from it or, if you have physical access, by creating bootable USB media. The choice is completely up to you.

If your current Hyper-V VM configuration version is lower than 5.0, the VMs need to be updated. Run the following command in PowerShell to list each VM name and version.

Get-VM * | Format-Table Name, Version

- If required, update the VM version. This will more than likely not be an issue: you are upgrading validated hardware from the HCI catalog, so at a guess your current cluster is not running Windows Server 2012.

- Ensure you have valid backups.

- Collect all cluster information via PowerShell: cluster name, Cluster Shared Volume names, network configuration such as IP addresses and VLANs, and witness information. This information will be needed later to reconfigure the cluster, as this process creates a new cluster using the same name.

- Shut down all virtual machines

- Take Cluster shared volumes offline

- Take the S2D storage pool offline

- Stop and disable the Windows Cluster service

- Shut down all physical nodes in the cluster

- Disable the Windows (node) and cluster computer objects in Active Directory.

- Edit the permissions on the OU that holds these objects: add the cluster computer account and, under the advanced permissions, grant it the “Create Computer objects” right. For detailed steps, refer to https://learn.microsoft.com/en-us/windows-server/failover-clustering/prestage-cluster-adds#grant-the-cno-permissions-to-the-ou
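The data-collection step in the list above could be scripted along the following lines. This is a minimal sketch rather than the exact commands I ran: the function name `Export-ClusterInventory`, the output file names, and the example path are all hypothetical, and `Get-NetIPAddress` only reports the node it runs on, so it would need to be run on each node.

```powershell
# Sketch: dump the cluster configuration to text files before the rebuild.
# Export-ClusterInventory and the file names are hypothetical, for illustration only.
function Export-ClusterInventory {
    param(
        [Parameter(Mandatory)] [string] $OutDir
    )
    New-Item -ItemType Directory -Path $OutDir -Force | Out-Null

    # Output file name -> command that produces the data to keep.
    $queries = [ordered]@{
        'cluster.txt'  = { Get-Cluster | Format-List * }
        'quorum.txt'   = { Get-ClusterQuorum | Format-List * }
        'csv.txt'      = { Get-ClusterSharedVolume | Format-List * }
        'networks.txt' = { Get-ClusterNetwork | Format-List * }
        'ip.txt'       = { Get-NetIPAddress | Format-List * }   # local node only
        'vdisks.txt'   = { Get-VirtualDisk | Format-List * }
    }

    foreach ($file in $queries.Keys) {
        & $queries[$file] | Out-File -FilePath (Join-Path $OutDir $file)
    }
}

# Example call (placeholder path; use storage that survives the reinstall):
# Export-ClusterInventory -OutDir '\\fileserver\share\ClusterInventory'
```

Save the output somewhere other than the OS disk of the nodes, since that disk is wiped during the reinstall.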

Installing Azure Stack HCI Operating System

I will not detail every step of the installation, as I’m sure the process is familiar to most. I will, however, call out some things to be aware of that may cause issues later on.

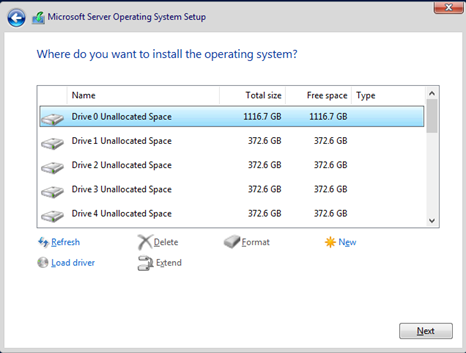

Important: because this process replaces an existing Windows Server operating system, it is vital to delete the correct partitions on the correct disk and to select the correct disk for installation. Deleting partitions on a disk that was previously part of the storage pool can result in the loss of virtual machines.

It is usually safe to assume Disk 0 is your OS disk; however, please confirm this is correct before deleting anything.

Configure Operating System

Once Azure Stack HCI is installed, it is important to configure the operating system. I will not list every single step required, just the most important ones.

Install the latest Azure Stack HCI Drivers

- Network drivers – Do not use the out-of-box drivers included in Windows!

- System / Chipset Drivers

- Update your firmware to the latest versions

Rename the computer and join the domain

Configure Network – I would really recommend Network ATC (upcoming article on this soon)

Install all required roles and features. You can use the following PowerShell to complete this on each node:

Install-WindowsFeature -Name Hyper-V, Failover-Clustering, FS-Data-Deduplication, Bitlocker, Data-Center-Bridging, RSAT-AD-PowerShell -IncludeAllSubFeature -IncludeManagementTools -Verbose

Install any base software your server or organization requires.

Reboot the node to ensure there is no pending reboot.

Repeat this on all nodes.

Cluster Creation

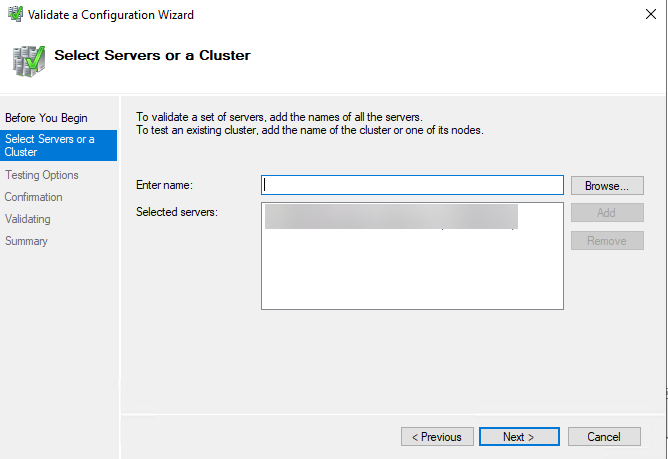

Ensure your local machine or remote administration server has the failover clustering tools installed.

Open Failover Cluster Manager.

First, validate the cluster configuration and fix any issues it reports, including warnings where possible.

Enter the names of the servers that will be cluster members.

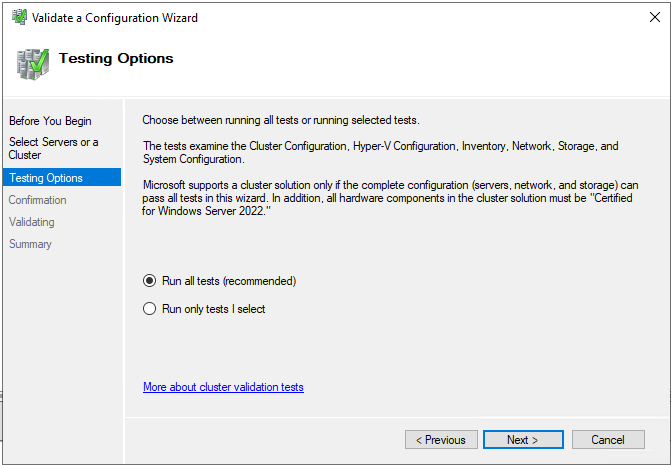

Press Next and select Run all tests.

Press Next and confirm.

This validates that each server that will be a cluster member is configured in a supportable way. It takes roughly 5-10 minutes to complete.

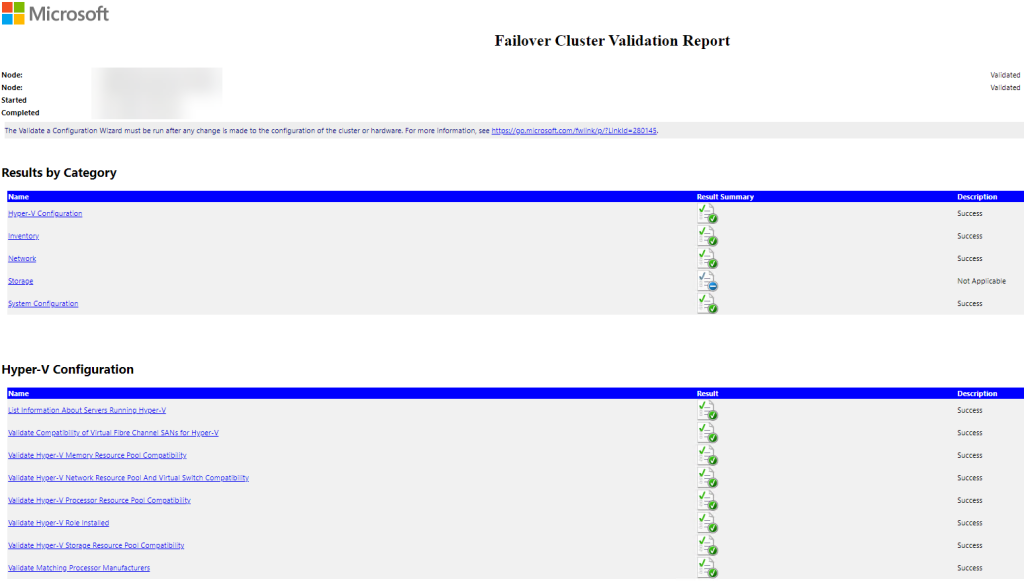

Once completed, click View Report.

Below is an example of a healthy configuration.

Create the cluster by selecting Create Cluster from Failover Cluster Manager. You can skip the validation step, as it has already been performed.

Enter the Servers to be added to the cluster

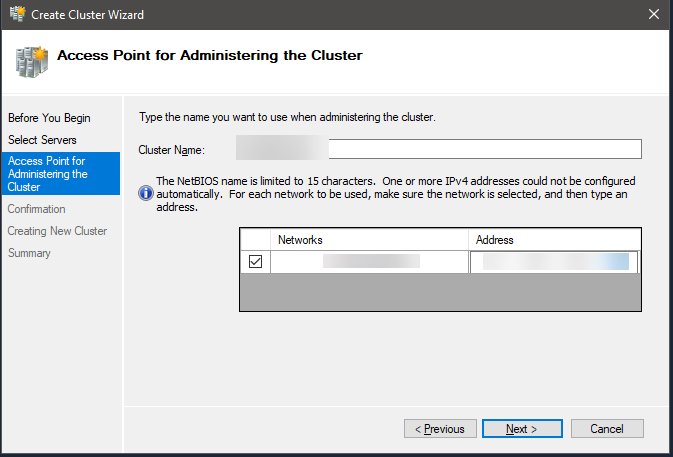

Enter the Cluster Name – This must be the same name as previously used

Enter the IP address for the cluster name

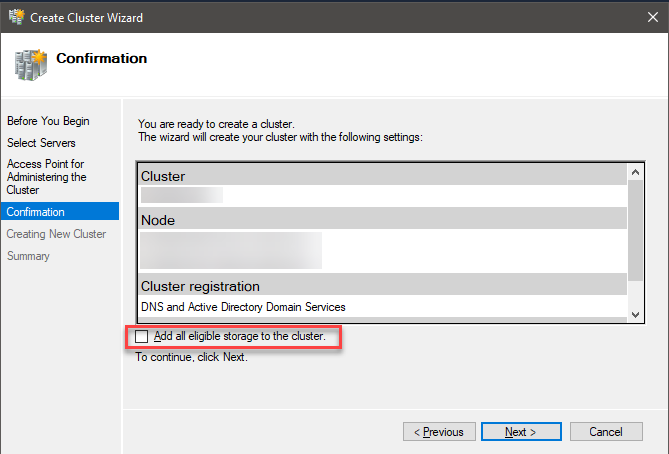

On the next screen, ensure you untick Add all eligible storage to the cluster

Click Next and allow the cluster to create
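If you prefer PowerShell over the wizard, the validation and creation steps above can be sketched as follows. I used Failover Cluster Manager myself, so treat this as an untested equivalent under assumptions: the wrapper `New-RebuiltCluster` and every node name, cluster name, and IP address shown are hypothetical placeholders. `Test-Cluster` and `New-Cluster` are the standard FailoverClusters cmdlets, and `-NoStorage` corresponds to unticking Add all eligible storage to the cluster.

```powershell
# Sketch only: New-RebuiltCluster is a hypothetical wrapper, not a built-in cmdlet.
function New-RebuiltCluster {
    param(
        [Parameter(Mandatory)] [string[]] $Node,         # servers that will be cluster members
        [Parameter(Mandatory)] [string]   $ClusterName,  # must match the previous cluster name
        [Parameter(Mandatory)] [string]   $ClusterIP     # IP address for the cluster name
    )

    # Validate the nodes first; Test-Cluster produces a validation report to review.
    Test-Cluster -Node $Node

    # Create the cluster without claiming any storage, so the existing
    # S2D disks are left untouched at this stage.
    New-Cluster -Name $ClusterName -Node $Node -StaticAddress $ClusterIP -NoStorage
}

# Example call with placeholder values:
# New-RebuiltCluster -Node 'NODE01', 'NODE02' -ClusterName 'CLUSTER01' -ClusterIP '10.0.0.50'
```

Review the validation report before letting the creation step run; in practice you may want to run the two commands separately rather than back to back.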

Configure Cluster

Connect to the cluster and reapply the configuration collected in the prerequisite steps.

Add the previous quorum (witness) location

Update / rename the network names
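Both of these steps can also be done from PowerShell. The sketch below assumes the previous witness was a file share; if yours was a cloud or disk witness, `Set-ClusterQuorum` has `-CloudWitness` and `-DiskWitness` parameters instead. The function name `Restore-ClusterSetting`, the share path, and the network names are hypothetical placeholders, to be taken from the data you collected earlier.

```powershell
# Sketch only: Restore-ClusterSetting is a hypothetical helper, not a built-in cmdlet.
function Restore-ClusterSetting {
    param(
        [Parameter(Mandatory)] [string]    $WitnessShare,    # UNC path of the previous file share witness
        [Parameter(Mandatory)] [hashtable] $NetworkNameMap   # current network name -> desired name
    )

    # Re-add the file share witness recorded before the rebuild.
    Set-ClusterQuorum -FileShareWitness $WitnessShare

    # Cluster networks are renamed by assigning the Name property.
    foreach ($oldName in $NetworkNameMap.Keys) {
        (Get-ClusterNetwork -Name $oldName).Name = $NetworkNameMap[$oldName]
    }
}

# Example call with placeholder values:
# Restore-ClusterSetting -WitnessShare '\\fileserver\witness' -NetworkNameMap @{
#     'Cluster Network 1' = 'Management'
#     'Cluster Network 2' = 'Storage'
# }
```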

Enabling Cluster S2D

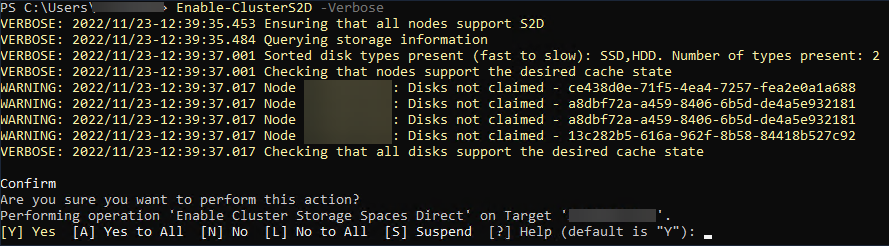

Connect to one of the cluster nodes via RDP or remote PowerShell.

Execute the following command:

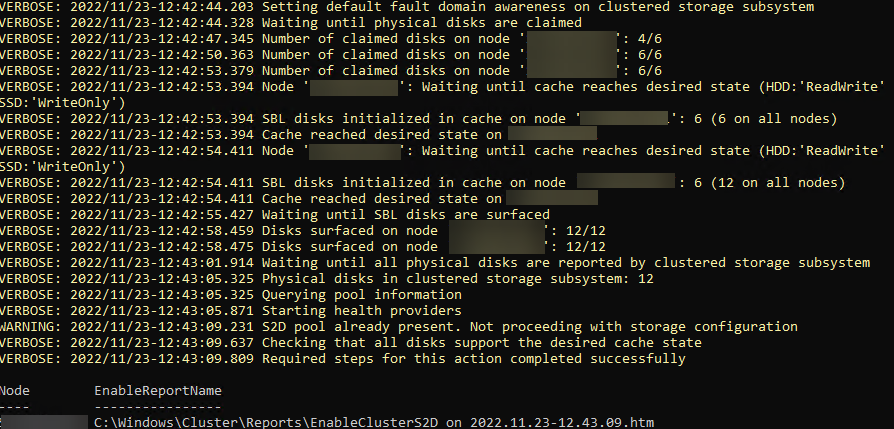

Enable-ClusterS2D -Verbose

This will attempt to bring in the storage pool and virtual disks that were associated with the previous cluster.

When prompted, press Y to continue.

This should have added the storage pool to the cluster, and the virtual disks should be online along with the Cluster Shared Volumes.

That is, however, not currently the case; for me it failed 100% of the time.

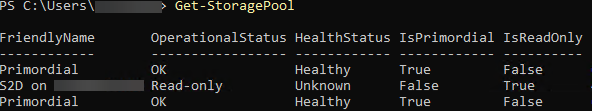

Run the following to see if the storage pool is read-only:

Get-StoragePool -FriendlyName 'S2D*'

Change the pool so it is no longer read-only with the following:

Get-StoragePool -FriendlyName 'S2D*' | Set-StoragePool -IsReadOnly $False

Check the virtual disks with the following:

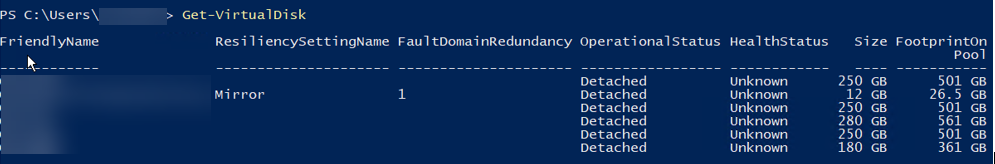

Get-VirtualDisk

The operational status will be Detached.

To bring the pool into the cluster, and the virtual disks into an operational status of OK, run the following:

Get-VirtualDisk | Where-Object {$_.IsManualAttach -EQ $True} | Set-VirtualDisk -IsManualAttach $False

Then re-run:

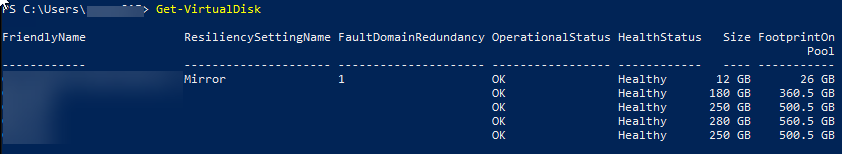

Get-VirtualDisk

From Failover Cluster Manager:

Connect to the cluster

- Right click on Disks and select Add Disk

- With all disks pre-selected click OK

- All virtual disks will now be listed in the cluster; however, their names will be Cluster Disk 1 etc. These need to be renamed using the information gathered earlier

- Right click each disk and rename it to Cluster Virtual Disk (<VirtualDisk Friendly Name>), including the parentheses

- For each disk (but NOT the ClusterPerformanceHistory disk), right click and select Add to cluster shared volumes
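With more than a handful of volumes, the rename and Add to cluster shared volumes steps above get tedious in the GUI. The following is a rough sketch of doing them in PowerShell, not the method I used. `Get-ClusterDiskName` and `Rename-ClusterDisk` are hypothetical helpers, and the mapping from each Cluster Disk N resource to its virtual disk friendly name is an assumption you must supply yourself after checking which disk is which.

```powershell
# Sketch only: both functions are hypothetical helpers, not built-in cmdlets.

# Build the resource name in the format described above.
function Get-ClusterDiskName {
    param([Parameter(Mandatory)] [string] $FriendlyName)
    "Cluster Virtual Disk ($FriendlyName)"
}

# Rename each cluster disk resource and add it to Cluster Shared Volumes,
# skipping the ClusterPerformanceHistory disk.
function Rename-ClusterDisk {
    param(
        # Current resource name -> virtual disk friendly name
        [Parameter(Mandatory)] [hashtable] $DiskMap
    )
    foreach ($resourceName in $DiskMap.Keys) {
        $friendlyName = $DiskMap[$resourceName]
        $newName = Get-ClusterDiskName -FriendlyName $friendlyName

        # Cluster resources are renamed by assigning the Name property.
        (Get-ClusterResource -Name $resourceName).Name = $newName

        if ($friendlyName -ne 'ClusterPerformanceHistory') {
            Add-ClusterSharedVolume -Name $newName
        }
    }
}

# Example call with placeholder names:
# Rename-ClusterDisk -DiskMap @{
#     'Cluster Disk 1' = 'Volume01'
#     'Cluster Disk 2' = 'ClusterPerformanceHistory'
# }
```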

If you would like to change the witness location you are free to do so.

The Storage Pool now needs to be updated.

To check the current storage pool version, run:

Get-StoragePool | ? IsPrimordial -eq $false | ft FriendlyName,Version

This should show as 201X (depending on the previous cluster OS, i.e. 2016 or 2019).

The following needs to be run if the version is not 2022:

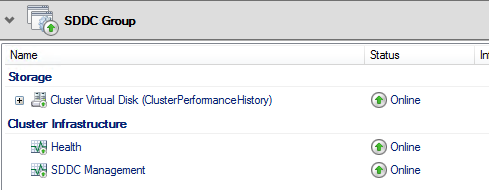

Get-StoragePool -Name s2d* | Update-StoragePool

Re-run Enable-ClusterS2D; this should correct the SDDC cluster resource group so that it contains the performance history disk.

Enable-ClusterS2D -Verbose

From Failover Cluster Manager:

Connect to the cluster if not already connected.

- Select Roles

- Select SDDC Group

- Select Resources; the Cluster Virtual Disk (ClusterPerformanceHistory) should be a member

Register Cluster

Before any VM or workload can be imported to the cluster, the registration process needs to be completed.

Follow the process defined on the Microsoft Learn page https://learn.microsoft.com/en-us/azure-stack/hci/deploy/register-with-azure

Once completed, run the following to check the registration status:

Get-AzureStackHCI

Import the Virtual Machines

There are varying ways to mass import; however, I found running the import per Cluster Shared Volume worked well.

To list each virtual machine to be imported, run:

Get-ChildItem -Path "C:\Clusterstorage\CSV-NAME\*.vmcx" -Recurse

Validate that you see what you expect to see.

To import all the virtual machines:

Get-ChildItem -Path "C:\Clusterstorage\CSV-NAME\*.vmcx" -Recurse | Import-VM -Register | Get-VM | Add-ClusterVirtualMachineRole

You can repeat the above for any other Cluster Shared Volume.

It is best practice to also update the VM version to the latest by running the below:

Get-VM | Update-VMVersion -Force

Congratulations, the upgrade to Azure Stack HCI is complete and all your virtual machines should be online. I hope this helps others out there.

I have provided this feedback to Microsoft and will assist them in finding out what has gone wrong with the documented process on their end.
